Containers. They’re not a fad, or a phase. Their adoption is moving noticeably faster than that of their predecessor, virtualization. In less than two years we’re already seeing interest in deploying app services in containers, which generally points to apps already in production that need scale, security, and speed to support the high expectations of today’s application experience.

Interestingly, containers are maturing along with their management and orchestration frameworks. Virtualization did not, but then again virtualization preceded cloud, which acted as a forcing function on other agile tech to adopt the APIs and templates that let the automation and orchestration of cloud be applied to virtual infrastructure. Today, both containers and virtualization (which could arguably be considered brethren technologies) are generally deployed along with an orchestration framework that coordinates provisioning, scale, and even routing of traffic to and from other apps and services (east-west traffic).

Although within the bounds of these frameworks’ domains these mechanisms work just dandy to provide localized scale and routing, the reality is that these apps and services are part of a bigger view: one that includes a more comprehensive “app” that requires interaction with users (north-south traffic). A late 2016 survey by Portworx found that the second most popular workload planned for containerization was “web front ends,” cited by 48% of respondents.

That almost guarantees that there is an upstream service (or device, if you prefer) providing scale, security, and speed between users and the “app” composed of services deployed within a containerized environment.

Invariably, that means there should be coordination between the container orchestration framework and that upstream device/service.

Enter F5 Container Connectors. When we first announced Container Connectors, we were putting the finishing touches on our Mesos support. Today, we’re excited to be releasing support for Kubernetes, too.

F5 Container Connector

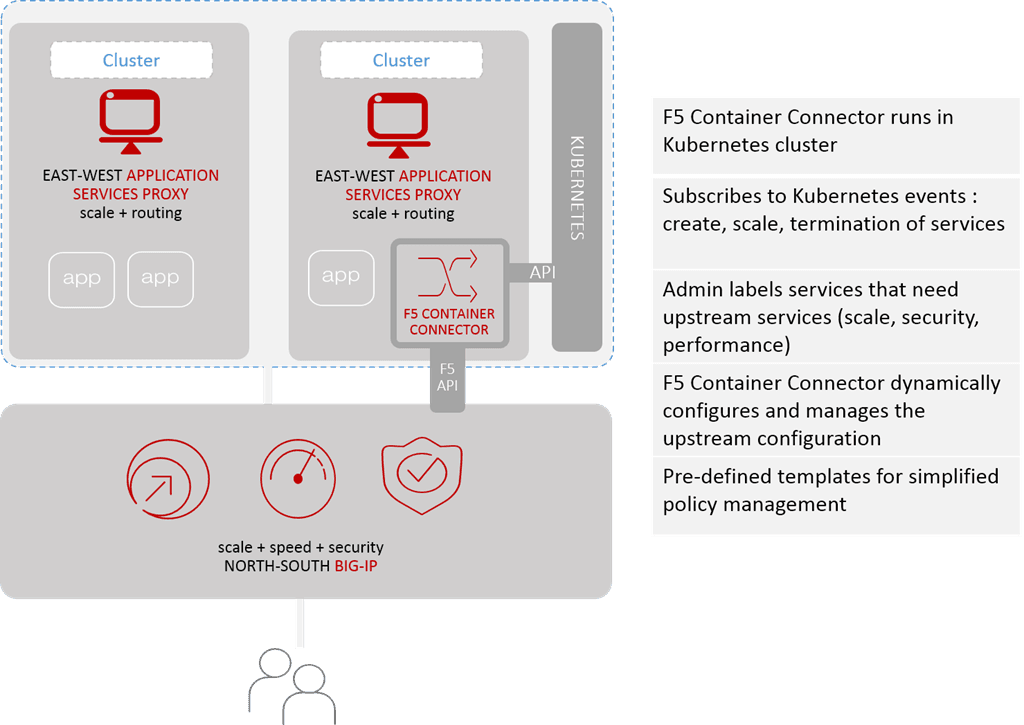

What the F5 Container Connector provides is the integration required to bridge the last, internal mile, where north-south traffic turns into east-west traffic. It’s where the BIG-IP (upstream from where Kubernetes sits) hands off requests to the appropriate local proxy (which could be our own Application Services Proxy or, in the case of Kubernetes, kube-proxy) or pod to be processed.

The BIG-IP then waits for a response, performs its own assigned tasks like data scrubbing (to prevent data leaks) and image optimization (to improve performance), and sends the response on its way back to the user.

The key component is the Container Connector, which resides in a Kubernetes cluster and subscribes to events like other components. When it sees an appropriate event, it dynamically creates or modifies configuration on the upstream BIG-IP with the appropriate details. That might mean scaling up by adding new members to a cluster/pool, or scaling down by taking them out again.
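To make that event-driven behavior a little more concrete, here is a minimal Python sketch of the general reconcile pattern: take the set of backends reported by an orchestration event and work out which members to add to or remove from an upstream pool. This is an illustration only, not the Container Connector’s actual code and not the BIG-IP API. The `Member`, `Pool`, `reconcile`, and `handle_event` names and the event shape are all hypothetical, and the pool is just an in-memory stand-in.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Member:
    """One backend address, e.g. a pod IP and port (hypothetical type)."""
    address: str
    port: int


@dataclass
class Pool:
    """In-memory stand-in for a pool on the upstream device (hypothetical)."""
    name: str
    members: set = field(default_factory=set)


def reconcile(pool, desired):
    """Make pool.members match `desired`; return (added, removed)."""
    desired = set(desired)
    to_add = desired - pool.members      # scale up: new backends appeared
    to_remove = pool.members - desired   # scale down: backends went away
    pool.members = desired
    return to_add, to_remove


def handle_event(pool, event):
    """Apply one orchestration event to the pool.

    The event shape ({"port": int, "addresses": [str, ...]}) is an
    assumption made for this sketch, not a real Kubernetes payload.
    """
    desired = {Member(addr, event["port"]) for addr in event["addresses"]}
    return reconcile(pool, desired)
```

Feeding `handle_event` a first event with two addresses adds two members; a later event that drops one address and introduces another yields one addition and one removal. A real controller would translate those two sets into calls against the upstream device rather than mutating a local object.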

The most important facet is that it’s integrated and occurs automagically, which means consistent application of security policies at the north-south ingress/egress without further complicating the containerized environment. It also encourages the use of SSL/TLS to secure app (and API) traffic with users by providing a single point at which to manage and maintain certificates. That also keeps the containerized environment free of additional operational complexity, a common complaint when containers and their associated app architectures are introduced.

What F5 Container Connectors provide is the ability to automatically provision and manage the app services required for containerized apps in a production environment, without requiring massive overhauls to the environment that already existed in development and test (you did test, right?). It supports the notion of frictionless deployments and preserves the notion of portability so important to containerized applications today.

F5 is committed to ensuring that the delivery and security services apps need are available in every kind of environment whether that’s containers or virtual machines, in any cloud or a traditional environment. But we’re also committed to making those services as easy and painless to provision and manage as possible, and that means solutions that fit into all kinds of architectures.

To support DevOps toolchains we’ve made the Container Connectors and our version of kube-proxy freely available on Docker Hub (https://hub.docker.com/r/f5networks/), and an open source version of our Kubernetes controller can be grabbed from our git repository (https://github.com/F5Networks/k8s-bigip-ctlr).
