This blog post is the sixth in a series about AI data delivery.

Artificial intelligence pipelines do not end when data reaches a model. In many ways, that’s where the hardest work begins.

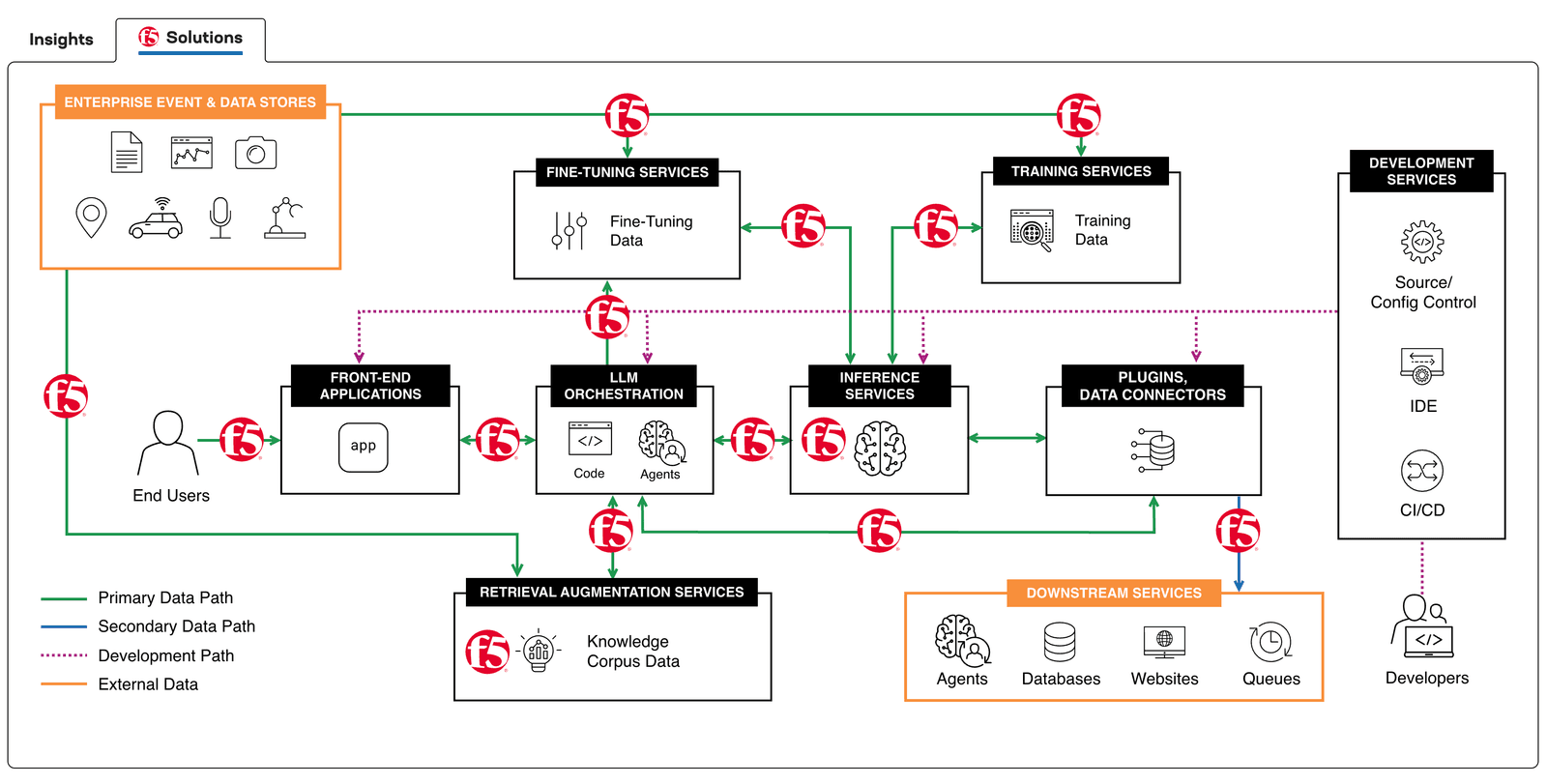

After data has been ingested, transformed, and delivered for training or inference, organizations must still deliver the resulting AI applications to users, partners, and systems at scale. These applications now power customer experiences, automate workflows, and inform decisions in real time. As a result, availability, performance, security, and governance remain top application delivery concerns.

Delivering AI applications reliably requires more than the latest and greatest models or infrastructure. It requires a control layer that can manage AI traffic intelligently, protect scarce resources, and adapt to highly dynamic environments.

This is where the application delivery controller (ADC) continues to play a central role.

Why AI application delivery is different

Traditional applications typically serve short-lived, predictable requests. AI applications behave very differently. As I’ve travelled the world and spoken with many people who have built AI applications, I’ve noticed several patterns that come up again and again.

AI traffic is often bursty and uneven. Requests may include large prompts and context. Responses may stream continuously rather than returning all at once. A single request can fan out to engage multiple services, including retrieval systems, embedding services, and downstream tools. Behind the scenes, inference workloads consume expensive and capacity-constrained resources such as GPUs.

AI applications also introduce a new notion of sessions. Session data is no longer held within a single server; instead, it lives in the context passed back and forth in the prompt and response. While this eases the burden on the inference servers, it means that considerable intelligence must be built into the application delivery layer. The ADC is now integral to the application.

Failures in AI systems are also rarely binary. An inference cluster may be running but overloaded on certain nodes. A retrieval service may be responding slowly. A specific region may be saturated while others remain healthy. Without intelligent delivery controls, these partial failures quickly degrade user experience and drive up costs.

At scale, AI application delivery becomes a systems problem, not just a networking one.

The ADC as the control plane for AI apps

An ADC provides a centralized control plane that sits between clients and AI services. Rather than simply forwarding traffic, it actively governs how AI applications are consumed.

Platforms such as F5 BIG-IP manage AI traffic across hybrid and cloud-native environments, consistently enforcing policies while adapting to changing conditions in real time. F5 BIG-IP is both an ADC and a core component of the F5 Application Delivery and Security Platform.

In the F5 AI reference architecture, BIG-IP acts as the front door to AI applications. It brokers access to model endpoints, retrieval services, and supporting APIs, ensuring that traffic is routed efficiently, secured appropriately, and observed continuously.

Intelligent traffic management for AI workloads

AI application delivery places new demands on traffic management. Simple round-robin load balancing is no longer sufficient. (We discuss why AI storage demands a new approach to load balancing in a previous post in this series.)

ADCs enable intelligent request steering based on backend health, geographic proximity, capacity, and semantic policy. Requests can be routed to specific model versions, inference pools, or regions, enabling teams to introduce new models safely through canary deployments or phased rollouts. When a backend degrades, traffic can be redirected automatically without waiting for a full outage.
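To make the idea of health-aware steering and canary rollouts concrete, here is a minimal Python sketch. It is an illustration only and not how BIG-IP is configured or implemented: the `Backend` class, the `pick_backend` function, the `weight` and `load` fields, and the `max_load` threshold are all hypothetical names chosen for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class Backend:
    name: str            # hypothetical pool member name
    model_version: str   # e.g. "v1" (stable) or "v2" (canary)
    weight: float        # relative share of traffic this backend should get
    healthy: bool = True
    load: float = 0.0    # fraction of capacity in use, 0.0 to 1.0


def pick_backend(backends, rng=random, max_load=0.9):
    """Pick a backend by weight, skipping unhealthy or saturated members."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends available")
    eligible = [b for b in healthy if b.load < max_load and b.weight > 0]
    if not eligible:
        # Every healthy member is saturated: degrade to the least-loaded one
        # instead of failing the request outright.
        return min(healthy, key=lambda b: b.load)
    return rng.choices(eligible, weights=[b.weight for b in eligible], k=1)[0]


# A 95/5 split sends a small slice of traffic to the new model version.
pool = [
    Backend("stable-a", "v1", weight=95),
    Backend("canary-a", "v2", weight=5),
]
chosen = pick_backend(pool)
print(chosen.name, chosen.model_version)
```

Raising the canary's weight in steps gives a phased rollout, and marking a member unhealthy or over `max_load` removes it from selection without waiting for a full outage.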

This approach is critical when backend resources are scarce and costly. Instead of overprovisioning GPUs to absorb spikes, organizations can use ADC-level controls to smooth traffic, steer requests away from busy nodes, and protect overall system stability.

Security and governance at the AI front door

Every AI application endpoint is still an application endpoint, and often an attractive one for cybercriminals.

As AI adoption grows, so do new forms of abuse. Cost-exhaustion attacks, automated scraping, prompt flooding, and unauthorized access to premium models are becoming common concerns. These risks cannot be addressed solely within the model itself.

ADCs provide a natural enforcement point for security and governance controls. Authentication, authorization, rate limiting, API protection, and model protections can all be applied consistently before traffic reaches AI services. This reduces risk while preserving flexibility for application teams.

Importantly, ADCs can be an ideal place to incorporate governance objectives. Organizations can enforce tenant-level quotas, prioritize critical workloads, and align AI usage with policy and budget constraints without rewriting applications or retraining models.
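As a rough illustration of tenant-level quotas, the sketch below uses a token bucket per tenant. It assumes usage is charged in some unit such as LLM tokens rather than raw request counts, which is a choice made for this example. The `TenantQuota` class and its parameters are hypothetical and do not represent an F5 API.

```python
import time


class TenantQuota:
    """One token bucket per tenant; cost is charged in units such as LLM tokens."""

    def __init__(self, capacity, refill_per_sec, clock=time.monotonic):
        self.capacity = capacity              # maximum burst per tenant
        self.refill_per_sec = refill_per_sec  # sustained rate per tenant
        self.clock = clock                    # injectable for testing
        self._buckets = {}                    # tenant -> (available, last_seen)

    def allow(self, tenant, cost):
        """Return True and charge the tenant if enough budget remains."""
        now = self.clock()
        available, last = self._buckets.get(tenant, (self.capacity, now))
        # Refill for the elapsed time, never exceeding the bucket capacity.
        available = min(self.capacity,
                        available + (now - last) * self.refill_per_sec)
        if cost > available:
            self._buckets[tenant] = (available, now)
            return False
        self._buckets[tenant] = (available - cost, now)
        return True


quota = TenantQuota(capacity=1000, refill_per_sec=100)
print(quota.allow("tenant-a", 800))  # within budget
print(quota.allow("tenant-a", 300))  # exceeds what is left right now
```

Because each tenant has its own bucket, one tenant exhausting its budget does not affect another, and the limits can change without touching the application or the model.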

Performance optimization for interactive AI

As AI becomes more interactive, performance becomes visible to users.

Latency spikes, stalled responses, and dropped streams immediately erode trust. ADCs help optimize performance by managing long-lived connections, offloading TLS overhead, and handling streaming protocols efficiently. They can detect backend saturation early and reroute traffic before users notice degradation.
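One simple way to think about early saturation detection is an exponentially weighted moving average (EWMA) of observed latency per backend. The sketch below is an assumption-laden illustration, not a description of how any F5 product measures saturation: the `LatencyTracker` class, the `alpha` smoothing factor, and the `threshold_ms` value are all hypothetical.

```python
class LatencyTracker:
    """Track a smoothed latency per backend and flag likely saturation."""

    def __init__(self, alpha=0.3, threshold_ms=800.0):
        self.alpha = alpha                # weight given to the newest sample
        self.threshold_ms = threshold_ms  # above this, treat as saturated
        self.ewma = {}                    # backend name -> smoothed latency

    def observe(self, backend, latency_ms):
        prev = self.ewma.get(backend)
        if prev is None:
            self.ewma[backend] = latency_ms
        else:
            self.ewma[backend] = self.alpha * latency_ms + (1 - self.alpha) * prev

    def saturated(self, backend):
        return self.ewma.get(backend, 0.0) > self.threshold_ms

    def choose(self, backends):
        """Prefer non-saturated backends; pick the lowest smoothed latency."""
        ok = [b for b in backends if not self.saturated(b)]
        candidates = ok or list(backends)
        # Backends with no samples yet count as 0.0 and are tried first.
        return min(candidates, key=lambda b: self.ewma.get(b, 0.0))


tracker = LatencyTracker(alpha=0.5, threshold_ms=800)
for ms in (200, 220, 210):
    tracker.observe("gpu-a", ms)
for ms in (300, 900, 1500):   # latency climbing: a sign of saturation
    tracker.observe("gpu-b", ms)
print(tracker.choose(["gpu-a", "gpu-b"]))
```

The smoothing means a single slow response does not trigger rerouting, while a sustained climb does, well before the backend stops responding altogether.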

By separating delivery concerns from application logic, teams gain the ability to tune performance dynamically as workloads evolve.

Service discovery and Kubernetes integration

Most AI applications run on Kubernetes, where services scale dynamically and endpoints change frequently.

ADCs integrate with Kubernetes to discover services automatically, track scaling events in real time, and route traffic to the correct pods or services as they appear and disappear. Kubernetes also provides constructs that support inference-aware traffic decisions, which F5 takes advantage of. This integration allows F5 to extend consistent delivery and security policies beyond the cluster, across hybrid and multicloud environments.
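The core of tracking endpoints as they appear and disappear can be sketched as a small reconciliation loop over watch-style events. The event dictionaries below are a simplified, hypothetical shape invented for this example. Real Kubernetes watch events carry full API objects, and this is not the code F5 uses for its Kubernetes integration.

```python
def apply_event(pool, event):
    """Update the pool (address -> ready flag) from one watch-style event."""
    kind, address = event["type"], event["address"]
    if kind in ("ADDED", "MODIFIED"):
        # A pod can exist before it is ready to serve traffic.
        pool[address] = event.get("ready", False)
    elif kind == "DELETED":
        pool.pop(address, None)
    return pool


def routable(pool):
    """Only endpoints that report ready should receive traffic."""
    return sorted(address for address, ready in pool.items() if ready)


pool = {}
events = [
    {"type": "ADDED", "address": "10.0.0.1:8000", "ready": False},
    {"type": "ADDED", "address": "10.0.0.2:8000", "ready": True},
    {"type": "MODIFIED", "address": "10.0.0.1:8000", "ready": True},
    {"type": "DELETED", "address": "10.0.0.2:8000"},
]
for event in events:
    apply_event(pool, event)
print(routable(pool))
```

Keeping readiness separate from existence matters for inference pods in particular, since a pod may be scheduled long before its model has finished loading.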

For AI workloads, this external control plane is especially valuable. It decouples traffic management and policy enforcement from individual clusters, enabling centralized visibility and governance even as AI services scale rapidly and independently.

Why F5 for AI application delivery

F5 brings together traffic management, security, and observability in a single, proven platform. For AI applications, this means predictable performance, stronger protection of expensive backend resources, and consistent governance across environments.

By operating at the delivery layer, F5 enables organizations to scale AI safely without slowing innovation. Teams can introduce new models, support new use cases, and expand across clouds while maintaining control over cost, risk, and reliability. (Learn more about F5 and its role in AI application delivery.)

From refined fuel to reliable delivery

Previously in our series on AI data delivery, we compared the AI journey to an oil pipeline, in which ingestion, transformation, and delivery prepared the fuel. Data was extracted, refined, and made ready for use.

AI application delivery is the final step in that journey. It is the distribution network that ensures refined fuel actually reaches engines safely, efficiently, and at scale. Pumps, valves, metering, and monitoring all matter just as much as refining capacity.

Application delivery controllers provide that control layer for AI. They regulate flow, prevent overload, enforce policy, and maintain service levels as demand grows. Without them, even the most advanced models struggle to deliver consistent business value.

At scale, AI success is not only about what models can do. It is about how reliably those capabilities are delivered.

F5 delivers and secures AI applications anywhere

For more information about our AI data delivery solutions, visit our AI data delivery and infrastructure solutions webpage. Also, stay tuned for the final post in our AI data delivery series focusing on securing the AI data pipeline: privacy, compliance, and resilience.

F5’s focus on AI doesn’t stop with data delivery. Explore how F5 secures and delivers AI apps everywhere.

Be sure to check out our previous blog posts in the series:

Fueling the AI data pipeline with F5 and S3-compatible storage

Optimizing AI by breaking bottlenecks in modern workloads

Tracking AI data pipelines from ingestion to delivery

Why AI storage demands a new approach to load balancing

Best practices for optimizing AI infrastructure at scale
