Scalability is a critical capability for both business and applications. On the business side, scaling operations is key to enabling the new app economy and its nearly constant state of frenetic deployment. On the application side, scalability is how we support the growth of consumer and corporate populations alike, ensuring top-notch performance and availability to both.

At the heart of scalability is load balancing. Since the mid-1990s, load balancing has been the way in which we scale applications by distributing workloads across increasingly bigger farms of servers, networks, applications, and services.

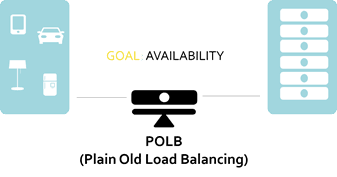

In the old days, load balancing was all about the network. Building on existing techniques and protocols that scaled out networks, load balancing focused on those aspects of client-server communication that could be used to distribute workloads. Plain Old Load Balancing (POLB) was born.

It worked, and worked well. Without POLB, it’s hard to say whether the web as we know it would have come to fruition. It was, and still is, critical to supporting today’s corporate and consumer populations, and it will be just as critical to supporting tomorrow’s.

Sometime in the early 2000s the strategic location of the load balancer made it the perfect platform to expand with other features that enhance the application experience. Application acceleration, optimization, caching, and compression were eventually added. Security, too, would find its way onto this same platform, its position in the network too perfect to ignore. As the gatekeeper to applications, the load balancer can inspect, extract, modify, and transform application traffic, which gives it unique insights that apply as much to security as to performance.

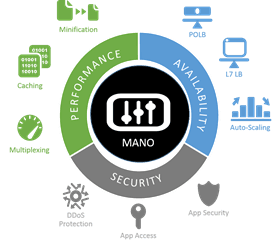

With those additions, we went beyond POLB into something else. Load balancers became application delivery platforms, as competent at delivering secure, fast, and available apps as they had been at simply scaling them with POLB.

The ADC (application delivery controller), as these modern platforms are known, offers not only POLB capabilities but a veritable cornucopia of capabilities that make it invaluable to the folks who today are tasked with not only deploying apps but delivering them as well.

Increasingly that is not the network teams, but architects and operations.

That shift has been driven by a number of technologies arising in the past decade. From agile to cloud to DevOps to mobile, best practices today are pushing some application services out of the network and into the domain of applications.

And throughout the shifting of responsibility for scalability from the network to architects and ops, the ADC is being deployed as though it’s stuck in the 1990s. Treated as a POLB, the bulk of the value it offers architects and ops in improving performance and security is left on the table (or more apropos, on the rack).

That’s usually because the architects and operations teams now responsible for scaling applications aren’t familiar with just what an ADC can do for them and, more importantly, their apps and architectures. It’s time we change that and go beyond POLB.

Beyond POLB: L7 LB Proxy

The goal of POLB was simple: availability. Whether that was through implementation of an HA (High Availability) architecture based on redundancy or through an N+1 scaling architecture, the goal was the same: keep the web site (or app) up and available, no matter what.

Today the goal is still availability, but it’s coupled with efficiency and agility. All three are key characteristics of modern business and the architectures that support its critical applications. To get there, however, we have to go beyond POLB, to a world of application routing and load distribution that brings those critical efficiencies to both app architectures and infrastructure. These capabilities are based on layer 7 of the network stack, the application layer, and in modern architectures that means HTTP.

L7 LB Proxies are capable of not just distributing load based on application variables like connection load, response times, and application status, but also dispatching (routing) based on URLs, HTTP headers, and even data within HTTP messages.
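As a rough illustration of dispatching on URLs and HTTP headers, here is a minimal Python sketch. The `Request` type, the `choose_pool` function, and the pool names are all hypothetical, made up for this example; they are not part of any particular product’s API, and a real L7 proxy would express the same idea in its own configuration or scripting language.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """Hypothetical, stripped-down view of an HTTP request."""
    path: str
    headers: dict = field(default_factory=dict)


def choose_pool(request: Request) -> str:
    """Pick a backend pool from application-layer (HTTP) data.

    Pool names are illustrative assumptions, not real configuration.
    """
    # Header-based dispatch: send mobile clients to a dedicated pool.
    user_agent = request.headers.get("User-Agent", "")
    if "Mobile" in user_agent:
        return "mobile_pool"
    # URL-based dispatch: route by path prefix.
    if request.path.startswith("/api/"):
        return "api_pool"
    if request.path.startswith("/images/"):
        return "static_pool"
    return "default_pool"
```

A POLB device would see only IP addresses and ports here; the routing decision above depends entirely on data that exists at layer 7.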

Able to parse, extract, and act upon these various types of application layer data, L7 load balancers today can participate in and augment applications and microservice architectures in a variety of ways, including:

- Data Partitioning (Sharding) Architectures

- Complex URL dispatch

- Response header manipulation

- Dynamic routing based on backend data

- Scaling by Functional Decomposition

- API metering and access control
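To make the first item in the list above concrete, data partitioning (sharding) at the proxy can be sketched as a stable mapping from a key found in the request to one of several backend pools. This is only a sketch under assumptions: the `shard_for` function, the pool names, and the choice of a customer ID as the partition key are all hypothetical.

```python
import hashlib

# Hypothetical pool names, one per data shard.
SHARD_POOLS = ["shard_pool_0", "shard_pool_1", "shard_pool_2"]


def shard_for(customer_id: str, pools=SHARD_POOLS) -> str:
    """Map a partition key to a pool so the same key always hits the same shard."""
    # Use a stable hash; Python's built-in hash() is randomized per process for str.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(pools)
    return pools[index]
```

Simple modulo hashing remaps most keys when the number of pools changes, so an architecture that resizes often would likely prefer consistent hashing or an explicit lookup table.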

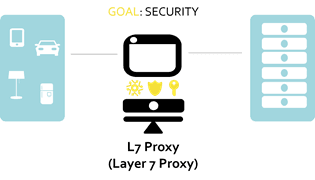

Beyond POLB: Security

Because an L7 LB is logically positioned upstream from the applications it services, it has visibility into each and every request and response. This means it’s capable of performing a variety of checks and balances on requests before they’re allowed to proceed to the desired application. These checks and balances are vital in ensuring that malicious traffic is detected and rejected, keeping its adverse effects from impacting the stability, availability, and integrity of backend applications.

But it’s about more than just stopping bad traffic from reaching servers. It’s also about stopping bad actors. That means being able to identify them: not just those without valid credentials, but those who are using credentials that don’t belong to them in the first place. The role of access control has been growing as the number of breaches resulting from stolen credentials has been on the rise. Cloud, too, has re-introduced an urgency to manage credentials globally across applications, to reduce the potential risk from orphaned and test accounts that grant access to corporate data stored in SaaS-based applications. Identity federation has become more than just a productivity-improvement strategy. It’s become a key tactic in the overall corporate security strategy.

Capabilities that can be added to an L7 LB include both those that analyze the behavior and identity of clients and those that analyze the actual content of messages being exchanged between clients and backend services. This enables the L7 LB to perform functions including:

- Layer 7 (App) DDoS Protection

- Web app firewalling

- OWASP Top 10

- App access control

- Federated identity
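One small building block behind behavior-based protections such as layer 7 DDoS mitigation is per-client request limiting. The sketch below is an assumption-laden illustration, not how any specific product implements it: the `SlidingWindowLimiter` class and its parameters are hypothetical, and the caller supplies the clock value so the logic stays easy to follow.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client within `window` seconds."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        # client id -> timestamps of that client's recently allowed requests
        self._hits = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: reject before the backend sees it
        hits.append(now)
        return True
```

In practice the client identifier might be an IP address, a session cookie, or an API key, and production systems typically combine limits like this with many other signals.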

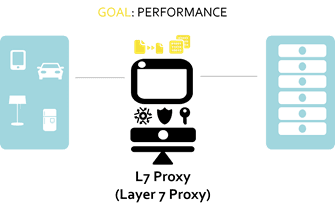

Beyond POLB: Optimization

Performance is a critical component of application, and therefore business, success. In earlier days, organizations would deploy multiple application acceleration solutions in front of applications to speed up the delivery process. ADCs first incorporated these same solutions as optional (add-on) components, but eventually integrated them directly into the core load balancing capabilities, a reflection of how important performance is to the ADC’s core focus on delivering applications and services.

So it is that the L7 LB has a variety of features and functions focused on improving performance. Some of those functions, in particular those that act on app responses headed back to the client, are focused on the content. Many of these are core developer techniques used to make content smaller, while others are focused on decreasing the number of round trips required between the client and server.
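Making content smaller on the way back to the client can be sketched with HTTP compression. The following Python example is a simplified illustration using the standard library’s `gzip` module; the `compress_response` function is hypothetical, and it deliberately ignores quality values (such as `gzip;q=0`) in the `Accept-Encoding` header.

```python
import gzip


def compress_response(body: bytes, accept_encoding: str):
    """Return (body, extra_headers), gzip-compressing only if the client accepts it."""
    # Simplification: strip any ";q=..." parameter and ignore its value.
    encodings = [token.split(";")[0].strip().lower()
                 for token in accept_encoding.split(",")]
    if "gzip" not in encodings:
        return body, {}
    compressed = gzip.compress(body)
    # Tiny payloads can grow when compressed; send them unchanged.
    if len(compressed) >= len(body):
        return body, {}
    return compressed, {"Content-Encoding": "gzip", "Vary": "Accept-Encoding"}
```

Doing this at the proxy rather than on each application server keeps the CPU cost of compression off the backends.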

It’s also important to note that L7 proxies are not just focused on optimizing content. They are also key enablers of performance-focused architectures that include database load balancing techniques as well as the integration of in-memory caches (like memcached) to improve overall application performance. The L7 proxies that are well-suited to enabling these architectures generally include a data path scripting language that provides the ability to tailor distribution and routing.

Other capabilities are focused on reducing overhead on backend servers as a way to improve performance, as well as being able to distribute load based on performance thresholds.
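Distributing load based on performance thresholds can be sketched as follows. This is a minimal example under stated assumptions: the `pick_backend` function, the backend names, and the idea of feeding it a dictionary of average response times are all hypothetical, and a real proxy would gather those measurements itself.

```python
def pick_backend(avg_response_ms: dict, threshold_ms: float) -> str:
    """Choose the fastest backend, preferring those at or under the threshold."""
    if not avg_response_ms:
        raise ValueError("no backends available")
    # Keep only backends currently meeting the performance threshold.
    healthy = {name: ms for name, ms in avg_response_ms.items()
               if ms <= threshold_ms}
    # If none qualify, fall back to the full set rather than failing outright.
    candidates = healthy or avg_response_ms
    return min(candidates, key=candidates.get)
```

For example, `pick_backend({"app1": 120.0, "app2": 45.0, "app3": 300.0}, threshold_ms=200.0)` selects `app2`, the fastest backend under the threshold.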

Optimizing features and capabilities of an L7 LB include:

| Client side (Response Optimization) | Backend Server Side |
| --- | --- |
| Minification | TCP Multiplexing |
| HTTP Compression | Caching |
| Buffering | Performance-based load distribution |
| Script aggregation | Auto-scalability |
| SSL Offload | |

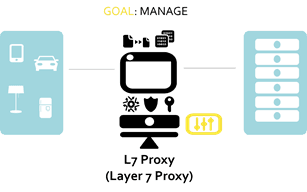

Beyond POLB: Manage

If any infrastructure is to be more integrated into the application architecture, it absolutely must be able to be integrated into the CI/CD pipeline. That means supporting modern DevOps- and Agile-related methodologies and the toolsets that enable the execution of automated release and delivery strategies.

To that end, an L7 LB must provide not only APIs through which it can be managed, but also the means through which it can be monitored and deployed, so that continuous feedback and delivery are possible within today’s demanding schedules and budgets.

F5 supports a variety of programmatic options for provisioning, deploying, managing, and monitoring the L7 LB infrastructure including:

- REST and SOAP APIs

- Plug-ins for Chef, Puppet, Cisco, VMware, Electric Cloud, New Relic, Nagios, Ansible, Urban {code}, and more

- Smart templates

Beyond POLB: It’s Time

There are a variety of reasons why responsibility for application infrastructure like POLB and L7 LB is being shifted from the network teams to the application and operations teams. Whether it’s the adoption of agile or DevOps as a response to business demand for more apps with shorter delivery timelines, or the need to scale more rapidly than ever before, going beyond POLB will provide a greater array of options for the security, performance, and availability needed to support modern app and delivery architectures on a single, manageable, programmable platform that fits into the modern CI/CD pipeline.

It’s time to consider going beyond POLB and start taking advantage of the infrastructure that’s increasingly falling into your domain.
